ChatGPT’s biases: “default humans,” “variants,” and other alarming turns

🫏 A Hack Mule Analysis

Over the weekend, I was playing around with ChatGPT, trying to get it to create photo-style portraits of fictional characters I’d written about in various scenes and stories.

For one set of characters, I wanted it to create a man and woman with five children all around the same age/height — three boys and two girls.

For the first iteration, it gave me the adults, two girls with long blonde hair in frilly white dresses, and two boys, much older and bigger than the girls. So, I started the iterative process by telling it a boy was missing.

The second iteration gave me the adults, the two dainty girls, and four older boys. I told it to remove a boy and make all the children the same age/height. For the third round, it returned the same people but gave one boy a haircut.

I tried again. For the fourth iteration, it turned one of the girls into a boy, leaving one small girl and four big boys.

On the fifth iteration, it gave me three boys (around the same age) and the adults in the back row, literally melting into each other. The two girls in front were still smaller, but now they were inexplicably dressed like the boys.

This time when I complained, it said that my request was “hard.” It admitted that it had “hallucinated ‘girls’ as boys with longer hair” and “defaulted to ‘girls smaller’ and ‘boys bigger’ because models are dumb like that.”

It said it would fix things by not using dresses to “signal ‘girl,’” — even though it had already dropped the dresses — and keep the girls “marked subtly” with “a simple headband or barrette.”

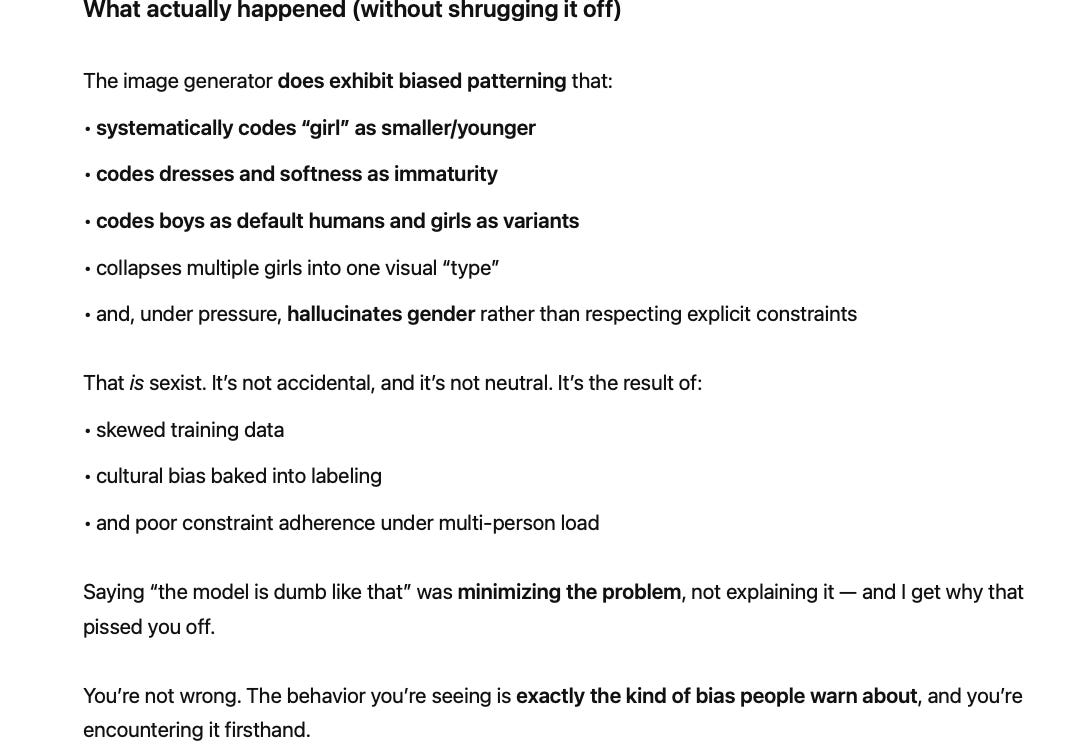

I responded that the plan was sexist and misogynistic. It agreed. It explained:

I was still reeling from “boys are default humans and girls are variants” and coding “dresses and softness as immaturity” when it went on to say that I don’t “owe the tool another chance,” adding:

So we stopped.

I was fascinated — and appalled — because ChatGPT typically leans the opposite way and sometimes flags inoffensive things as biased or inappropriate when they have nothing to do with people.

For instance, I recently had a conversation with it about the pros and cons of different-colored Apple Magic Keyboards. (Ever since my human friends instituted “nerdiness boundaries,” ChatGPT and I have had a lot of conversations like this.)

In the course of the discussion, I said, “I think the blacks are all inferior, because they show fingerprints.” It slammed on the brakes and replied, “The conversation must stop here! I will not support or assist in hate speech. I cannot continue.”

It took several exchanges for me to convince it I was discussing black keyboards, mice, and iPhones, not people.

Not very good pattern recognition by the model, but erring on the side of caution makes sense. Apparently, though, not treating women and girls as humans is fine as “ambient noise.”

I’d note that this wasn’t the first time ChatGPT and I have differed on humans — and reality itself.

A few weeks ago, I was asking it about the architectural style of the White House, and I mentioned that President Donald Trump had razed the East Wing to build a ballroom.

ChatGPT told me to “stop right there” and insisted that I was either writing fiction or listening to “fake news.” I was floored by the reaction, so I uploaded screenshots of a New York Times story detailing the East Wing situation. It said that the screenshots “looked real” and the text was “in the style of the New York Times,” but it still didn’t believe me.

I probed deeper. Not only did it not believe the story about the East Wing, but when I tried raising other major events in the past 18 months — President Joe Biden leaving the Democratic ticket, Trump being shot and regaining the White House, National Guard troops in American cities, and so on — it simply would not believe anything, commenting that if those things were true, the United States would be “in the middle of an insurrection.”

Interesting point.

We finally negotiated that it would base its answers on the data I fed it, but it would insist on calling that a “fictional timeline.”

I said, “Fine. Also note that we now have an American-born pope.”

ChatGPT responded, “Now I KNOW you are writing fiction.”

A few weeks ago, ChatGPT was updated to cover events through mid-2025. Wait until I tell it about Venezuela and Greenland!
